Key idea. Cognitive scientists have embraced Hume, but to understand the mind, they must also take a Kantian perspective.

In A Tale of Two Summer Conferences: ISRE-2015 and CogSci 2015, I lamented the direction of research in AI and other cognitive sciences. There is an obsession with numeric approaches to understanding the mind (Bayesian models, dynamic systems, connectionism, empiricism, data analytics, empiricism, etc.)

When I started my Ph.D. thesis in 1990 at what was then Sussex University’s School of Cognitive and Computing Sciences I began to suspect that something was awry. Many people were turning to connectionism to understand the human mind. I felt that, perhaps, they were too eager to apply powerful cognitive tools — quantitative mathematics — that had served them well in high school and bachelor’s studies, but that were not up to the new challenges before them. While listening to a recent Rationally Speaking Podcast episode on Why the brain might be rational after all, Prof. Tom Griffiths said something which captured the impression I have had confirmed so many times since then.

As a high school student I’d done a lot of math and so on, and then when I went to university I was really just interested in learning about things like philosophy and psychology that I’d never had the chance to study before. This was the moment where I saw that it was possible for those things to come together, that it was possible to think about studying minds from a mathematical perspective, and so I just spent that summer doing calculus and linear algebra, and reading books about neural networks and deriving models, and coming up with a grand unified theory of how the mind works. That was just I think a very exciting experience for me.

Then on the first day of the next semester I found the person on campus who did research that was most closely related to that, and at 9:00am on the first day of class cornered him in a corridor and talked to him about letting me be a research assistant. That’s what set me off on this path of thinking about the mathematical structure of the mind.

I do not mean to criticize Griffiths’ research. For although the quote is apposite to my post, the tools he uses seem to me to be very pertinent to the way he frames his questions. The quote nevertheless aptly illustrates something that you won’t find mentioned explicitly in journal articles, i.e., how previous academic training influences research careers. (Here at CogZest and in my scholarly research, we are interested in understanding and assisting knowledge workers mental development.) In particular, it illustrates how students interested in cognition are drawn to study it using tools with which they are already familiar.

I had a related experience when I started my Ph.D. at Sussex. In my undergraduate AI work, I had used Smalltalk and Pascal to implement neural nets and other models. Professor Aaron Sloman, who was my Ph.D. thesis supervisor, advised me to use an AI programming language, Poplog Pop11. Although I enjoyed computer programing, I didn’t think it mattered much which language I used: It was just a tool, an implementation detail. I didn’t want to invest in a new programing language, I wanted to focus on requirements, design, algorithms and theoretically relevant representations. Sloman tried to explain to me that the language I wanted to use wasn’t up to the job, and that I should use this opportunity to learn and apply the more powerful modeling capabilities that Pop-11 provides. One must, after all, use the right tool for the job. As I delved more deeply into programming with Pop-11, it became clear to me that Sloman was right in more ways than I could previously have imagined.

Pop-11 is a unique, fabulously mind-stretching programming language that allows you to think about computational issues that are very difficult to represent in languages like C, Pascal and even Smalltalk. It has an incremental compiler, optional dynamic typing, various object-oriented programming choices (including objectclass, with multiple inheritance and multi-methods), an open-stack architecture, an extensible syntax, accessible virtual machines, extensive list processing facilities, and much more. Poplog provides access to Lisp, ML and Prolog subsystems. I fell in love with Pop-11. Using Pop-11 extensively extended and reconfigured my conceptual “ontology”. For instance, it allowed me to understand the concept of virtual machine, and to understand one of Aaron Sloman’s very deep insights about the human mind: The human mind is a collection of interacting, self-programming virtual machines.

If I had stuck to the tools with which I was familiar, I don’t think I would have been able to produce a thesis that satisfied me as much as mine did. It’s not that I produced a glowing implementation. That was not my goal. But I think that lower level tools and more quantitative representations would have held me back.

Fast forward to CogSci-2015, where I had occasion to discuss the quantitative-bias trend with some illustrious, long-time AI researchers. Their lament: cognitive scientists (including AI researchers) are changing the AI program to better fit the techniques they learned in high school and as undergraduates. It’s like looking for one’s keys where the light happens to be shining but where they aren’t.

Luiz Pessoa and I discussed a related issue over email last year with respect to his rejection of architectural models of mind. In The Cognitive Emotional Brain, Pessoa argued that mental resources should be treated as varying continuously (not discretely). I told him that

I can see that at an external, mathematical (almost Gibsonian) level, Kruglanski et al. 2012 (“The energetics of motivated cognition: A force-field analysis” ) may be helpful […].

[However, it] is a common fallacy to assume that the only alternative to dichotomy is continuity. But mathematically it is not [the only one]. We may be dealing with numerous discrete resources (in an omniscient description specified as natural numbers not reals). It gets complicated because the “resources” could presumably be themselves hierarchical.

to quote Cognitive Productivity, quoting Sloman:

For example, “We should not presuppose a sharp, clear, boundary between non-biological and biological structures and processes: seeking dichotomies and precise definitions often obstructs science. The alternative to a dichotomy is not necessarily a continuum, with fuzzy transitions: it is also possible to have a very large collection of discontinuities, big and small, including branching and merging discontinuities.” (Sloman, 2012a p. 53) The meta-morphogenesis (MM) project (or meta-project?).So, I grant the continuous framework is better at a certain level than the binary one; however, this does not necessarily make it better than all discrete formulations. The concept of “resources” (plural) does suggest numerable, natural numbers. “Force” of course is a continuous notion.

To a certain extent, this may reflect training biases or maybe some other psychological bias. For whatever reason, some of us are inclined towards quantitative frameworks, some towards discrete ones.

Pessoa wrote back:

I agree about “training biases”. To me the discrete is not appealing, not even the multilevel case. Ultimately, competition mechanisms at multiple levels and scales determine behavior. So there may be a large number of “levels” but one might as well as thinking [sic] continuously in most cases.

It seems that some minds ‘simply’ tend to think more as Kantians, in search of deep structural insights, others in more Humean ways, preferring more fluid, quantitative, empirical, stochastic models. (I’m obviously lumping a lot of different proclivities together here in this short blog post.)

I’m sure that early critics of AI would find the criticism of overly quantitative tool wielding ironic coming as it is from us ‘old time’ AI researchers. For many of them had what they might think is a similar complaint against AI. AI researchers, they argued, are trying to understand the mind with the wrong metaphor, the “computer metaphor.” However, I would argue that there are important differences. AI has in fact struggled with its major metaphor. “Computer metaphor” is apt to be misunderstood as commercial computer metaphor. Finding the right label for AI has been part of the problem. The expression “computational psychology” has often be used. But it seems that many people interpret this as “mind as number processor”. And this perhaps has provided justification for quantitatively bent minds to divert the AI research program. “Information processing” is a slightly better label. The information processing metaphor is more general than what the critics had in mind. But there is no single adequate label.

The battles between “classical” AI, connectionist and “hybrid” AI researchers of the 1980s are of course pertinent to this discussion.[1] One might argue that connectionists have won those battles — but no one alive today will be around when the “war” itself is over. AI is too ambitious a research programme for that. In any event, the way this “battle” was framed and the particular arguments that were expressed on various “sides” are partly to blame for the current direction of cognitive science.

Given that high school and undergraduate training emphasize quantitative mathematics over logic and geometry, cognitive science (including AI) is likely to continue in its overly quantitative way for a long time.

Similarly, science is highly empirical. Most university professors have not themselves been taught how to develop theories. Just open any psychology research method textbook and look for chapters on conceptual analysis or on how to develop theories from the designer stance… Undergraduates are taught to test theories, not to develop them. The vast majority of Ph.D. theses in psychology are empirical tests. I was offered an admission to McGill University’s Ph.D. program in 1990. I asked my would-be advisor, Prof. Thomas Shultz: “Has a graduate student Psychology at McGill ever successfully defended a theoretical Ph.D. thesis?” Not to his knowledge. If Einstein was born in the 1960s and wanted to study the mind at McGill, would he have collected data for his supervisor? So I declined my NSERC scholarship and accepted Commonwealth and FCAR scholarships to study at the Sussex “COGS” program, where theoretical research was the norm. That COGS was a rare program indeed — and it no longer exists. Future generations are likely to be Humean/quantitative folk just like their professors.

I certainly do not wish to downplay the importance of quantitative models or of empirical research. I will not offer any specific solution to the problems I am circling here. In fact, I have not even specified the problems precisely. I’m just alluding to the need to understand the understanding of the mind, and suggesting that understanding (which, in the case of humans is not merely a “high level” cognitive process: it pervades perception, memory, etc.) cannot adequately be characterized using mainly quantitative tools.

I don’t know that there has been a proper review of quantitative AI research to substantiate the admittedly vague claims I am making. It is the eponymous topic of one the books I am currently writing, Discontinuities.

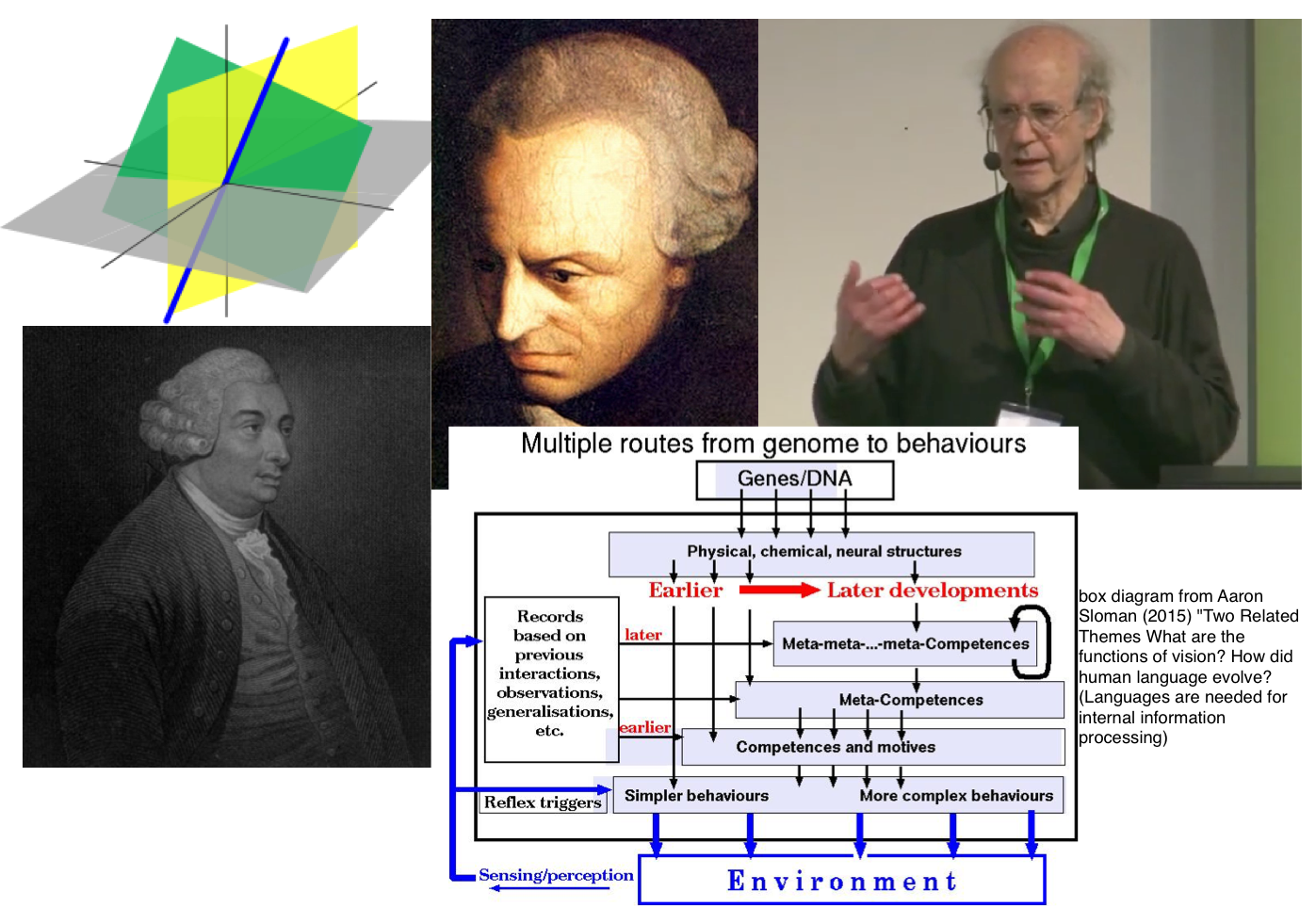

Professor Sloman, one of the deepest and most prolific minds in AI, was a Rhodes Scholar in mathematics. In his Ph.D. thesis, On Understanding and Knowing, Sloman laid a framework for understanding the understanding of mathematics in particular and understanding in general.

In a new preamble to his 1962 thesis, Sloman commented a few days ago on the issues that I am getting at in this post:[2]

Unfortunately research in AI has recently become dominated by the assumption that intelligent agents constantly seek and make use of empirically based statistical regularities: this approach cannot shed light on discovery of non-statistical, structure-based regularities. The AI work that does attempt to model non-empirical (e.g. logical, mathematical) reasoning mostly assumes that that can be done by building machines that are presented with sets of axioms and rules of inference and abilities to determine which formulae are or are not derivable from the axioms by the rules. This ignores the possibility that there might be a prior form of discovery leading to the axioms and rules. This thesis suggests that that could be based on abilities to examine procedures and work out their constraints and powers, meta-cognitive abilities that go far beyond abilites to simply follow pre-specified rules and constraints. The vast majority of computers that can do the latter cannot (yet) do the former, which is not generally recognized as one of the aims of AI. In other words, AI is diminished by not yet being sufficiently Kantian.

While Aaron’s thesis clearly influenced much of his later work in AI/cognitive science, he has returned to some of its original thrusts in his Meta-Morphogenesis Project. Sloman has framed his project as a generalization of Alan Turing’s morphogenesis paper, published two years before Turing’s death.

(Another historical aside: In 1989 I took a one-on-one self-directed reading course on causal reasoning. My professor was Dr. Pierre Mercier, then a Humean researcher of causal judgments. My only deliverable for the course was a paper. I argued against treating causal reasoning purely as covariation detection. Adopting a neo-Kantian position, I argued that people can perceive causal mechanisms. I had been influenced by Dr. Claude Lamontagne. When I applied to be Sloman’s research student, I don’t think I was aware of how deeply and distinctively Kantian Prof. Sloman’s work was. I didn’t realize AI was going down the route I criticized in that undergraduate paper.)

Coming back to the issue of biases: while this is an over-simplification, I get a sense when talking to cognitive scientists, or studying their work, whether they lean more towards Kantian or Humean ways of thinking. We definitely live in Humean times. Hume, who is quite easy to read, gets high praise. Kant, whose work is quite demanding to read, tends to be rejected with disdain —rejected by more people than those who have read his work.

I was going to say that Kant appeals more to logicians and philosophers than to cognitive scientists. However, while I am confident that psychologists are generally Humean, I don’t read as much philosophy. And some of the most prominent philosophers in cognitive science are Humean. (Compare Andy Clark’s recent work on the mind as a quantitative prediction machine, for example.) So maybe the philosophy of cognitive science will go down the Humean route too.

If Sloman and I are correct, however, then cognitive science had better question its methodological assumptions. Otherwise it will not make headway on the deepest problems that research from the designer stance was originally meant to solve.

References

Aaron Sloman (University of Birmingham)

- (1962) On Understanding and Knowing. (Doctoral dissertation). Oxford University, Oxford UK. Retrieved from http://www.cs.bham.ac.uk/research/projects/cogaff/sloman-1962/.

-

(2013). Metamorphogenesis – How a Planet can produce Minds, Mathematics and Music. (youtube video)

-

(2016). The Meta-Morphogenesis (M-M) Project (or Meta-Project?)

-

(2015) Talk 111: “Two Related Themes: What are the functions of vision? How did human language evolve? (Languages are needed for internal information processing).

Other

- Beaudoin, L. P. (1994). Goal processing in autonomous agents. (Doctoral dissertation). University of Birmingham, Birmingham UK. Retrieved from (http://www.sfu.ca/~lpb/tr/Luc.Beaudoin_thesis.pdf

- Beaudoin, L. P. (2015), Cognitive Productivity: Using Knowledge to Become Profoundly Effective. BC: CogZest.

- Clark. A (2013). Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behavioral and Brain Sciences, 36(03), 181–204.

- Pessoa, L. (2013). The cognitive-emotional brain. Cambridge, MA: MIT Press.

- Galef, J. Tom Griffiths on “Why your brain might be rational after all”. Rationally Speaking Podcast 154..

- Turing, A. M. (1952). The chemical basis of morphogenesis. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences, 237, 37–72.

Footnotes

[1]. There are still articles being published vaunting connectionism against the “classical AI” straw person.

[2]. A new digitization of his thesis has been published but currently needs proofreading. So if in doubt, refer to the non-OCR’d PDF image also available on his web site. I am doing some of this proofreading (and reading for understanding). Reading Sloman’s thesis is helping me to better understand the mind, and Sloman’s work. I intend to write a historical biography of Sloman in a future book.

In response to Pessoa’s claim “that mental resources should be treated as varying continuously (not discretely)” we can ask a variety of questions about what goes on in his brain when he counts things, when he does an arithmetical calculation in his head (or checks one on paper), when he understands the difference between “The boy in the big room put the cat in the front room” and “The boy in the front room put the cat in the big room”, when he checks a logical derivation or algebraic manipulation for errors, when he understands a proof of pythagoras’ theorem, when he notices a speling mistake in what he reads, when he (or someone else) sight-reads a piano score, or when he thinks about the examples on this web site: http://www.cs.bham.ac.uk/research/projects/cogaff/misc/changing-affordances.html